In my post What is Growth: Its Role, Mandate & Strategies, I discussed how inconsistently Growth is defined in the marketplace, not least between the US and the UK/EU. In this post, I zoom in further on the primary approach a Growth Team, or Growth Squad, has at its disposal: data-driven growth experimentation, and how to establish a testing and optimisation culture within your organisation.

Experimentation helps us make more informed decisions about our ideas and projects. A common mistake is to take an idea and run with it without testing the assumptions behind it.

"We think we know, but quite often we don’t know, we just assume." UNHCR, "Why there’s no innovation without experimentation"

From hacking to frameworks

In the early phases of a startup, the focus is on moving fast and iterating to achieve traction and prove product-market fit (PMF) by winning early adopters and revenue for your MVP.

Teams working in that phase of a startup are exposed to what can often be best described as organised chaos; quick trial and failure can help move the organisation forward. Speed is of the essence, and sometimes a small change can make a big splash.

The Growth Hacker is the driving force in this phase, using creative, low-cost and unconventional tactics to achieve early, significant growth, primarily by acquiring and converting new customers.

As the startup scales and the product moves closer to and beyond PMF, the role and characteristics of the Growth Hacker become increasingly unsustainable. The organisation has now established a level of knowledge and confidence to operate from.

Making dramatic changes without a good idea of the outcome won’t cut it anymore, and those early ‘quick-fire wins’ are difficult to repeat.

This is where “hacking” is replaced by “structure” and “frameworks”, and the Growth Hacker by a Growth Architect – of course, these titles are used here primarily descriptively.

In other words, culture and approach must transition from hacking (i.e. gut feel and quick wins) towards repeatability and sustainability, which requires a new level of organisation and cross-functional collaboration. Frameworks replace intuition and instil defensibility. They move the team from ad-hoc quick-fire wins to repeatable gains that allow for sustainable, incremental growth.

Move away from testing in a vacuum

One method in the Growth toolbox for achieving repeatable and sustainable growth is to conduct experiments. Most growth marketers and product managers run tests to some degree – some more frequently and in a more structured way than others. However, this is also where theory, practice, and myth diverge.

Note my distinction between ‘testing’ and ‘experimentation’. To make growth experimentation work, it’s paramount to plan, execute, and manage the process properly. Growth Hackers and inexperienced growth teams often fail to take a planned, strategic approach to their tests. They effectively test in isolation with the aim of optimising individual touchpoints and channel KPIs.

Whatever the team’s vocabulary and intentions, they chase successful outcomes, not learnings. Volume and frequency of tests are often their modus operandi – not storytelling. Plainly put: their hard work is unlikely to move the needle sustainably.

Worse, an occasional successful test result can make the unplanned, disjointed approach seem viable and camouflage real underlying issues.

These teams don’t run ‘experiments’; they conduct (more or less random) ‘independent A/B tests’ in a silo, limiting outcomes to a single channel or element. This slows down the organisation’s progress.

That’s not to say these teams never conduct experiments. Highly experienced performance marketers test ads across bidding, creative, timing and audience. Other functions like Product, CRM, Sales do too. However, this is mostly done on a tactical channel level and is testing, not experimenting.

This raises the question of the difference between an experiment and a test.

Experimentation vs. Testing

The terms ‘testing’ and ‘experimentation’ are often, and incorrectly, used interchangeably. Where a test is conducted to quantify an attribute, an experiment is a systematic sequence of individual tests for examining a causal relationship.

Experimentation should be all about learning. This involves formulating a hypothesis or assumption and seeking to validate it through a structured process that collects and analyses data.

An experiment can have multiple treatment groups with different variants of an intervention, or it may have a single treatment group and a single control group, which we call an A/B test. Essentially, A/B testing is the simplest type of controlled experiment and, on its own, is quite ineffective.

Once projects grow in size and impact, and you move from iterative experiments to including prototypes and big bets in your growth portfolio, you may want to plan several experiments against a single hypothesis and north star.

Optimisation vs. Results

Another linguistic mix-up is using optimisation and test results interchangeably. To optimise, you need more than one result. Running a single test that didn’t completely fail does not mean you’ve optimised.

I hear it way too often, but a single test result is not an optimisation! Of course, a winning result can contribute to one, but if a result is negative or inconclusive, nothing has been optimised. Let’s be accurate and use the correct terminology.

Results are outcomes of a test. Optimisations, on the other hand, are measurable improvements from a series of aggregated or blended test results that are connected by a shared high-level objective. By its very nature, an optimisation must include two or more test results, run on a single element over a specific period.

Structure ≠ Complication

We’ve already established that hacking isn’t repeatable. Driving real growth impact requires structured execution and cross-functional, hand-in-glove teamwork. This includes shared goals, a shared and consistent language, aligned sprint planning, and it may also extend to collaborative tools.

First-time founders who are pressured to show progress, or eager to repeat early hacking wins, may be reluctant to move to a structured, objective-based experimentation approach for fear of stifling progress.

And there’s some truth to it. Applying these structures too early in a startup’s life can be too restrictive, unnecessarily complex and potentially have a negative effect. The key (as always) is knowing when to transition. While there is no hard rule, the transition should generally happen, as mentioned above, when an organisation has some traction under its belt and is close to PMF.

Let’s be clear, the transition won’t be perfect initially. Adoption takes time and it will require pivots. However, if managed properly and phased in, adoption can happen fairly quickly and steadily, delivering lasting impact. You need to bring everyone along on that journey. Opting for low-hanging fruit before scaling up and adding more, and more complex, experiments is a good idea. Check out How to evolve your experimentation portfolio over time – a section in a previous post.

Another aspect is continuous, internal knowledge transfer.

Growth Hackers are individual beacons; they come, they impact and they may eventually leave. As your organisation matures, you shouldn’t be relying on individual expertise alone. As people join and leave a team, knowledge mysteriously seems to appear and disappear with them. Fostering a structured growth culture stores acquired knowledge and helps to democratically transition knowledge from individuals to the team.

A strong growth experimentation programme unlocks several benefits:

- Get smarter about what really moves the needle

- Remove bias and personal intuition from roadmaps

- Quantify impact to focus engineering time on high ROI

- Reduce risk by only shipping validated features

- Enable quick iteration by rapidly testing and learning

Objective-driven growth experimentation

On average, only 1 in 5 experiments reaches statistical significance, which you can also call a success or a win. It’s tempting to view the rest as failures.

However, negative or inconclusive results can guide where not to invest more of your own and your team’s time. Where a test doesn’t reach statistical significance, it can still eliminate weak ideas or reveal areas in need of refinement.

👉 What is statistical significance? Give yourself a quick refresher with this post from Optimizely and a post from Harvard Business Review (HBR).
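As a rough illustration of what "reaching statistical significance" can mean for a simple A/B test on conversion rates, here is a minimal sketch of a two-sided, two-proportion z-test using only the Python standard library. The function name, the example counts and the 0.05 threshold are my own assumptions for illustration, not a prescription; your testing tool may use a different method.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_p_value(conv_a: int, n_a: int, conv_b: int, n_b: int) -> float:
    """Two-sided p-value for the difference between two conversion rates."""
    rate_a = conv_a / n_a
    rate_b = conv_b / n_b
    # Pooled rate under the null hypothesis that both variants convert equally.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (rate_b - rate_a) / std_err
    return 2 * (1 - NormalDist().cdf(abs(z)))


ALPHA = 0.05  # assumed significance threshold, agree on it before launch

# Made-up example: control converts 200 of 5,000 users, variant 260 of 5,000.
p_value = two_proportion_p_value(conv_a=200, n_a=5000, conv_b=260, n_b=5000)
is_significant = p_value < ALPHA
```

A result with `is_significant` set to `False` is not a failure in itself; it simply means the data collected cannot distinguish the variant from the control at the chosen threshold.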

So, instead of making each experiment an end in itself, take a phased, objective-driven approach.

- Start by defining a clear growth objective aligned to business goals.

- Formulate a data-informed hypothesis and set your north star.

- Then, design a series of tests aimed at producing results.

- With the results, you can track metrics towards the overall goal and reduce the weight placed on any individual test.

This objective-focused experimentation framework removes luck and intuition as drivers of success and instead achieves the defensible, sustainable, repeatable growth discussed earlier. It eliminates the chasing of isolated wins.

In the next section I’m going to break down the process and framework.

Implementing a Growth experimentation framework

A formalised growth experimentation framework instils cross-functional alignment and puts guardrails around your process end-to-end. It also guides how ideas are generated, prioritised and backlogged before going live.

Hypothesising

Frame a testable problem statement and solution idea. Use a data-led approach as much as possible, considering quantitative and qualitative data.

There will be times when you’re low on data or have unreliable data – be upfront about it. You can use research and experience as a starting point. Crucially, start testing early to gather baseline (control) data to optimise against.

Battlecards containing specific experiment requirements are a great way to help build actionable hypotheses and achieve consistency across all your experiment planning. Airtable is my preferred tool, but use whatever you want.
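To make the idea of a battlecard more concrete, here is a minimal sketch of one as a Python data structure. Every field name and the "Because ..., we believe that ... will ..." template are hypothetical choices for illustration; in practice the same fields could just as well live as columns in Airtable or any other tool.

```python
from dataclasses import dataclass, field


@dataclass
class Battlecard:
    """Hypothetical battlecard: one record per planned experiment."""

    objective: str         # growth objective the experiment supports
    problem: str           # testable problem statement
    proposed_change: str   # solution idea being tested
    expected_outcome: str  # what you expect to change, and where
    evidence: list[str] = field(default_factory=list)  # quantitative and qualitative inputs
    low_data: bool = False  # flag thin or unreliable data upfront

    def hypothesis(self) -> str:
        """Render the card as one hypothesis sentence."""
        return (
            f"Because {self.problem}, we believe that {self.proposed_change} "
            f"will {self.expected_outcome}."
        )

    def is_actionable(self) -> bool:
        """True once every text field is filled in and the card either
        cites evidence or openly declares that data is thin."""
        texts = [self.objective, self.problem, self.proposed_change, self.expected_outcome]
        return all(t.strip() for t in texts) and (bool(self.evidence) or self.low_data)


card = Battlecard(
    objective="Increase trial-to-paid conversion",
    problem="many trial users never reach the pricing page",
    proposed_change="adding a pricing link to the onboarding checklist",
    expected_outcome="increase the share of trial users who start checkout",
    evidence=["funnel report", "user interviews"],
)
```

Calling `card.hypothesis()` renders the card as a single sentence you can paste into a planning doc, and `card.is_actionable()` is a cheap consistency check before an idea enters the backlog.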

Prioritising

Weigh potential experiments based on expected impact and required resources. Start with a simple framework, such as ICE (Impact, Confidence, Ease) or RICE (Reach, Impact, Confidence, Effort).

Word of caution: When prioritising experiments across different channels, you may find that using ‘Reach’ as a variable can disadvantage one idea over another. You can avoid this by prioritising by channel, or you can bundle experiments by an overarching objective, weighting Impact over Reach. Generally, I prefer the former over the latter.
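Here is a minimal sketch of RICE scoring with ideas ranked per channel rather than in one global list, which is one way to stop high-reach channels from crowding out everything else. The score follows the commonly used formula Reach × Impact × Confidence ÷ Effort; the `Idea` class, the scales and all example numbers are made up for illustration.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Idea:
    name: str
    channel: str
    reach: float       # users affected per period (assumed unit)
    impact: float      # assumed scale, e.g. 0.25 (minimal) to 3 (massive)
    confidence: float  # 0.0 to 1.0
    effort: float      # person-weeks (assumed unit)

    @property
    def rice(self) -> float:
        return self.reach * self.impact * self.confidence / self.effort


def rank_by_channel(ideas: list[Idea]) -> dict[str, list[Idea]]:
    """Group ideas by channel, then sort each group by RICE score, highest first."""
    grouped: dict[str, list[Idea]] = defaultdict(list)
    for idea in ideas:
        grouped[idea.channel].append(idea)
    return {
        channel: sorted(items, key=lambda i: i.rice, reverse=True)
        for channel, items in grouped.items()
    }


backlog = [
    Idea("Paid social creative refresh", "paid", reach=50_000, impact=0.5, confidence=0.8, effort=2),
    Idea("Lookalike audience test", "paid", reach=20_000, impact=1, confidence=0.6, effort=1),
    Idea("Winback email sequence", "crm", reach=3_000, impact=2, confidence=0.8, effort=2),
    Idea("Onboarding email subject line", "crm", reach=4_000, impact=0.5, confidence=0.9, effort=0.5),
]

ranked = rank_by_channel(backlog)
top_per_channel = {channel: items[0].name for channel, items in ranked.items()}
```

In a single global ranking, both CRM ideas in this made-up backlog would sit below both paid ideas purely because of reach. Ranking per channel gives each channel its own top candidate.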

Planning & building

Design test setups and variations. Visualise ideas – scrappy is fine but explain your thinking. For complex experiments, flow diagrams clarify and avoid misunderstandings. Use tools like Miro for visualisation and Airtable for experimentation management.

Set up an experiment report for tracking your primary and secondary metrics. Have only one success metric. You can apply secondary “calibration” metrics to indicate progress towards the first.

You should also plan for scenarios where you may need to pause or terminate your growth experiment. But don’t use this as a backdoor for influencing results mid-experiment.

Executing tests

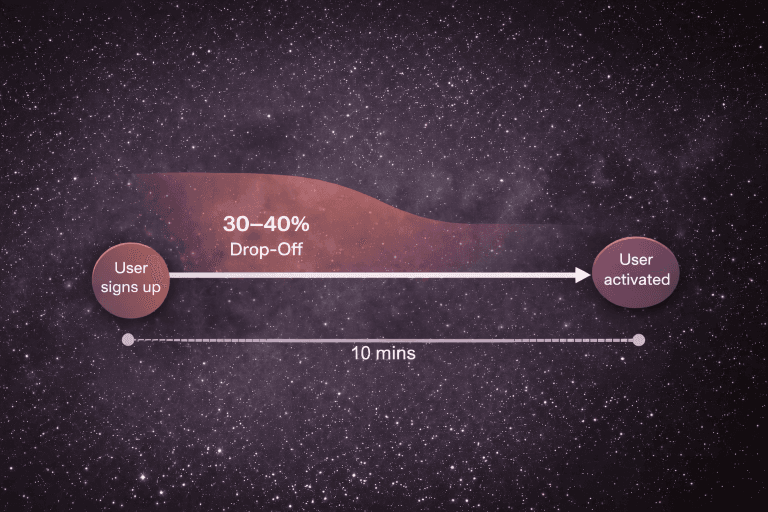

Launch the experiment and let it run for a set period. Don’t fool yourself or put unrealistic timelines forward. Experiment results are driven by the number of users entering your experiment funnel.

Calculate how many users you require to reach statistical significance, then compare this against your (realistically) expected traffic to estimate how long the experiment needs to run.
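As a sketch of that calculation, the snippet below uses the standard normal-approximation formula for comparing two conversion rates to estimate users per variant, then divides by daily traffic. The function names, the default significance level (0.05) and power (0.8), and the example baseline, lift and traffic figures are all assumptions for illustration; a dedicated sample-size calculator may give slightly different numbers.

```python
from math import ceil
from statistics import NormalDist


def sample_size_per_variant(
    baseline_rate: float,
    min_relative_lift: float,
    alpha: float = 0.05,
    power: float = 0.8,
) -> int:
    """Approximate users needed per variant to detect the given relative lift."""
    p1 = baseline_rate
    p2 = baseline_rate * (1 + min_relative_lift)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided test
    z_beta = NormalDist().inv_cdf(power)
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return ceil((z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2)


def days_to_run(users_per_variant: int, variants: int, daily_users: int) -> int:
    """Days of traffic needed when users are split evenly across variants."""
    return ceil(users_per_variant * variants / daily_users)


# Made-up example: 4% baseline conversion, aiming to detect a 20% relative lift,
# with 1,200 users entering the experiment funnel per day.
needed = sample_size_per_variant(baseline_rate=0.04, min_relative_lift=0.20)
days = days_to_run(needed, variants=2, daily_users=1200)
```

The smaller the lift you want to detect, the more users you need, so be honest about the minimum effect that would actually matter to the business.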

No hard rules here, but I can’t see any good reason for an experiment to run for less than two weeks. Two weeks is the usual sprint cycle, and it aligns well with the planning of your product and engineering teams.

My suggestion: Plan your growth experimentation in sprint cycles: 2 weeks, 4 weeks, etc. That is, if your organisation’s sprint is 2 weeks, of course. Otherwise, adjust accordingly.
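If you already have an estimate of how many days an experiment needs to collect enough users, rounding it up to whole sprints is straightforward. The helper below is a hypothetical sketch; the 14-day default and the one-sprint minimum simply mirror the suggestion above.

```python
from math import ceil


def sprints_needed(required_days: int, sprint_days: int = 14, min_sprints: int = 1) -> int:
    """Round an estimated run time up to whole sprints, never below the minimum."""
    return max(min_sprints, ceil(required_days / sprint_days))


# An experiment estimated at 18 days is planned across two 14-day sprints.
planned_sprints = sprints_needed(18)
planned_days = planned_sprints * 14
```

Adjust `sprint_days` if your organisation runs a different sprint length.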